AI prompting mastery guide for HR teams


Executive Summary

Generative AI is rapidly becoming essential for HR efficiency and effectiveness. However, most teams are using AI at only 10-20% of its potential because they don't understand how to communicate with it effectively. This guide provides your team with research-backed techniques to unlock AI's full power for HR tasks or strategic brainstorming.

A note from the editor: Not sure what something means in this guide? We put together a full glossary of key terms you need to know when building your confidence and skillset at work. Open the full glossary here.

Key Insight: AI Models Are Probability Engines

The Foundation: AI doesn't "think" or "reason" like humans. Instead, it predicts the most statistically likely response based on patterns in its training data. The Large Language Models (LLMs) behind Generative AI tools, such as OpenAI’s ChatGPT or Anthropic’s Claude, are trained on extremely large datasets drawn largely from the public internet. However, we rarely have full visibility into how these models are trained, who trains them, where the data came from, how it was collected, or who owns it. Gen AI is a socio-technical system shaped by many external factors beyond your input and its output. Understanding this changes everything about how you interact with AI.

Remind yourself that Gen AI works by recognizing patterns and predicting how likely one word is to follow another, with no human-style reasoning or logic behind it. Keeping this in mind will help you guide it better.

What This Means for HR: The quality and specificity of your input (prompt + context) directly determines the quality of your output. Vague inputs = vague results. Specific, well-structured inputs = precise, useful results. Remember that context also includes data context. Because Generative AI is built on datasets shaped by society, it can easily reproduce and amplify existing biases, so being mindful of this is essential to minimize the risk of reinforcing them. This makes context and prompt design especially critical. By thoughtfully adding constraints, you can address factors you might not have considered otherwise.

Pro tip: Don’t expect your AI to finish your thoughts; it can’t read your mind. Instead, guide it step by step, as if you’re walking it through the process. Think of it like teaching a child who is eager to please but still learning how you communicate and what you want. Just like telling a story, you need to cover all angles clearly, so that it doesn’t just please you but acts how you need it to.

The 6 Most Effective Techniques (2025 Research)

- Role Assignment – Tell the AI who it should “be” (act as an HR recruiter, trainer, or policy advisor) to set tone and expertise.

- Few-Shot Prompting – Share a few example job descriptions, interview questions, or performance reviews so it learns your style or the format you want.

- Knowledge Blocks – Provide key HR policies, company values, or benefits info for the AI to reference consistently. Giving the AI ready-made info to reuse increases accuracy and consistency.

- Chain-of-Thought – Have the AI reason step by step when analyzing resumes, creating workflows, or solving HR challenges.

- Context Stacking – Add extra background or details (e.g., company culture, role requirements, past practices) to help the AI tackle bigger, more complex tasks for more complete outputs.

- One Chat, One Task – Keep each conversation focused (e.g., one chat for writing a policy, another for drafting an email) to avoid confusion.

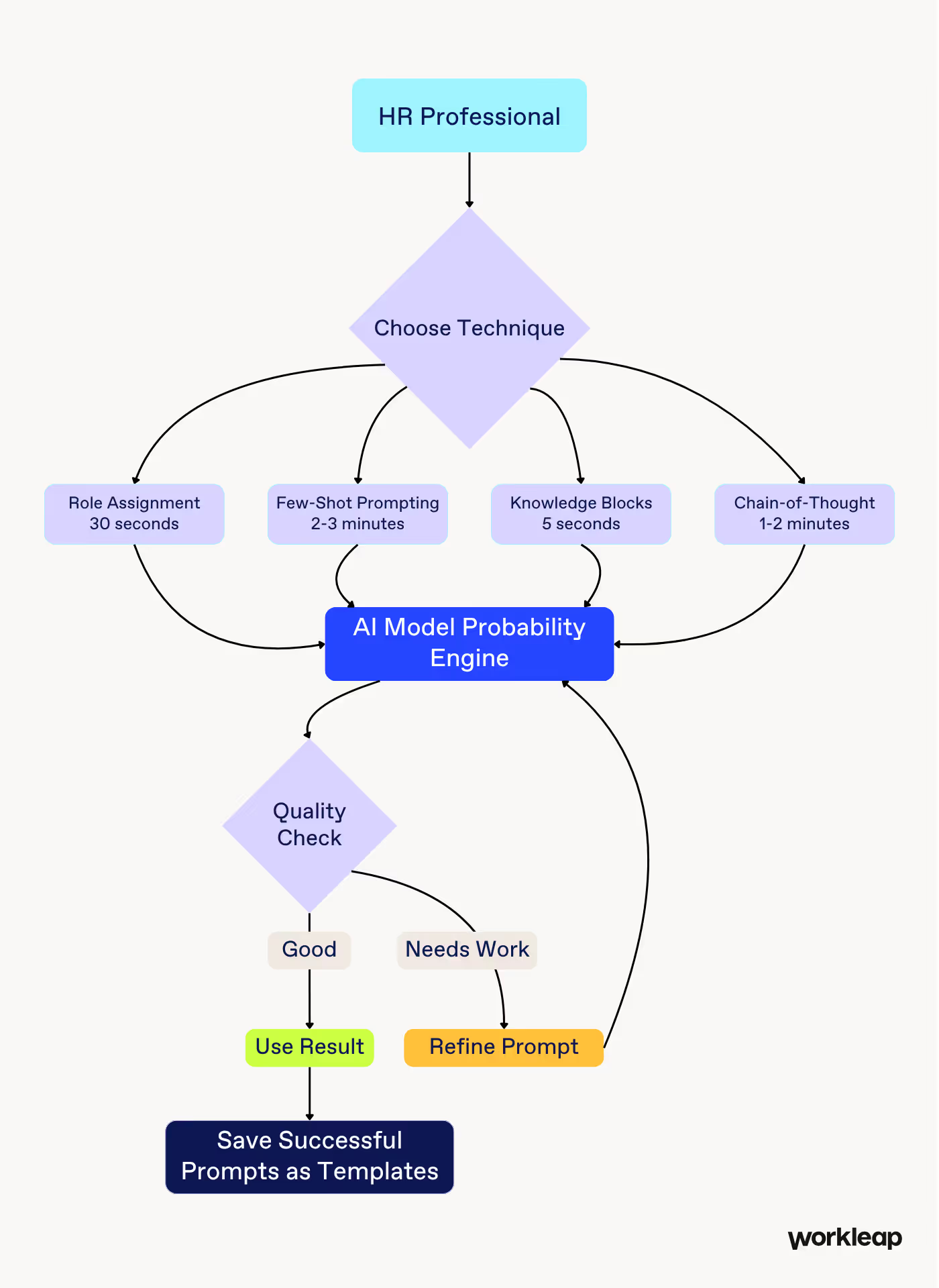

AI Prompting Workflow

Immediate Actions for Your Team

Week 1: Foundation

- Start with "One Chat, One Task" - Use a fresh conversation for each distinct HR task

- Add Role Assignment - Begin prompts with specific expertise

- Embrace Specificity - Use precise HR terminology instead of general language

Week 2: Power Techniques

- Try Few-Shot Prompting - Show AI 2-3 examples before asking for new content

- Build Your First Knowledge Blocks - Create reusable context for your company and processes

Week 3: Advanced Implementation

- Use Chain-of-Thought for complex tasks - Ask AI to "think step-by-step"

- Stack Context systematically - Layer company info, constraints, goals, and culture

Complete Implementation Guide

Understanding AI: The Foundation for Effective Prompting

Before diving into prompting techniques, it's crucial to understand what we're actually working with when we interact with AI models. This foundational knowledge will transform how you approach every interaction and help you understand both the capabilities and limitations of these powerful tools.

AI Models Are Probability Engines, Not Reasoning Engines

The most important concept to grasp is that AI models like ChatGPT, Claude, or Gemini are fundamentally probability engines. They don't "think" or "reason" in the human sense. Instead, they analyze patterns in vast amounts of text data and predict the most statistically likely next word, phrase, or response based on the input they receive.

Think of it this way: when you ask an AI to write a job description, it's not reasoning about what makes a good job description. Rather, it's drawing from millions of examples of job descriptions it has seen during training and generating text that has the highest probability of resembling those patterns. This is why the quality and specificity of your input (your prompt and context) directly impacts the quality of the output.

Why this matters for HR: Understanding this helps explain why AI sometimes produces inconsistent results, why it can "hallucinate" (generate plausible-sounding but incorrect information), and why providing clear, specific context is so critical for getting reliable outputs. Because it is a probability engine, AI can also unintentionally reproduce historical biases when the data it was trained on contains biased language or practices. Being aware of this is crucial when using AI in HR, so that you can mitigate the risk of harmful, unintended bias.

The Three AI Imperatives: Harmless, Helpful, Truthful

In Christopher Penn's framing, AI models are trained with three core imperatives, listed in order of importance:

- Be Harmless - Avoid generating content that could cause harm

- Be Helpful - Provide useful and relevant responses

- Be Truthful - Generate accurate information

This hierarchy explains some AI behaviors that might seem puzzling. For instance, an AI might refuse to help with a legitimate HR task if it perceives potential harm, or it might prioritize being helpful over being completely accurate, leading to occasional hallucinations.

Why this matters for HR: This framework helps you understand why AI might sometimes give overly cautious responses about sensitive HR topics, or why it's important to fact-check AI-generated content, especially for compliance-related materials.

Disclaimer: Remain Intentional when using AI

Always be conscious of why you are using AI and the purpose behind your interaction. Your intention shapes the narrative, direction, and outcome of the conversation.

If your goal is brainstorming, you may want to leave more room for creativity and looser constraints. On the other hand, if you require outputs that meet specific standards, make sure to clearly state these requirements in your prompt and reinforce them consistently.

Remaining intentional means two things:

- Explicitly stating your intention within the prompt.

- Personally staying aware that, as the human driving the interaction, your intention in using AI will guide the process.

Your intention could be to brainstorm, use AI as a thought partner, research, refine an idea, automate a task, proofread, generate suggestions, enhance processes, or learn. It can also work against you: when your intention leans towards laziness, you risk handing the AI control of the result. The possibilities are endless, but the key is to remain clear on what you, as the human, want the AI to do. This clarity ensures that you stay in the driver’s seat.

Finally, intention should not only be set at the beginning but carried throughout the entire interaction. By doing so, you foster thoughtful, purpose-driven use of AI. It also highlights that you are part of the output: your choices, guidance, and ethical considerations shape the results just as much as the AI’s capabilities. There should always be a human in the loop.

One Chat, One Task: The Importance of Clean Context

One of the most practical principles from Chris Penn's work is maintaining one chat per task. Each new conversation should focus on a single, specific objective. This prevents the AI from drawing correlations between unrelated information and making probability calculations based on mixed contexts.

We use the term ‘task’ to describe the goal of each AI conversation. A task doesn’t need a concrete deliverable, such as a list of potential interview questions. Using AI as a brainstorming partner also counts: that underlying purpose is the task. You can even group chats into folders for the areas that matter most to you as an HR leader, such as recruitment, employee relations, or organizational design.

This is one of the most valuable opportunities for AI: a brainstorming exchange can spark ideas that evolve into the next conversation, extending and deepening the work.

Take advantage of the fact that the AI remembers earlier messages in a conversation and can build on previous inputs as you iterate. However, just as with humans, switching context or tasks mid-conversation can cause confusion. Because the AI has no real logic for piecing unrelated threads together, mixing topics raises the chance of hallucination and keeps you from getting the most out of it. Stay on topic.

Pro tip: You can branch a conversation by starting a new thread in a fresh chat. If a conversation derails, you can also edit an earlier message, tighten its constraints, and continue from there.

Why this works: AI models have limited "working memory" and can become confused when trying to juggle multiple, unrelated tasks within the same conversation. Starting fresh ensures the AI focuses solely on your current objective.

HR Example: Don't use the same chat to draft a job description, analyze survey data, and create a policy document. Each task deserves its own conversation with clean, focused context.
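To make the idea of clean context concrete, here is a minimal sketch of how "one chat, one task" might look if you tracked conversations yourself. The `Chat` class, the `chat_for` helper, and the role/content message layout are illustrative assumptions, not part of any specific AI product.

```python
from dataclasses import dataclass, field


@dataclass
class Chat:
    """One conversation dedicated to a single task (hypothetical structure)."""
    task: str
    messages: list = field(default_factory=list)

    def add_user_message(self, text: str) -> None:
        # Each message is stored only in the chat that owns the task.
        self.messages.append({"role": "user", "content": text})


# Hypothetical registry: one fresh chat per distinct HR task.
chats = {}


def chat_for(task: str) -> Chat:
    """Return the chat for this task, creating a clean one if needed."""
    if task not in chats:
        chats[task] = Chat(task=task)
    return chats[task]


chat_for("job description").add_user_message(
    "Draft a job description for a payroll specialist."
)
chat_for("survey analysis").add_user_message(
    "Summarize the themes in these survey comments."
)
# A follow-up goes back into the matching chat, not a mixed one.
chat_for("job description").add_user_message("Shorten the requirements section.")

for task, chat in chats.items():
    print(task, "->", len(chat.messages), "message(s)")
```

Because each task has its own message list, nothing from the survey analysis can leak into the job description conversation.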

The Most Effective Prompting Techniques for 2025


Role Assignment: Narrowing the Probability Space

What it is: Explicitly assigning a specific role or expertise to the AI at the beginning of your prompt.

When assigning a role it is important to add a few dimensions such as:

- Business Context: Company size, industry, the groups you partner with, growth stage (scale-up vs. established), etc.

- Behavioural and Leadership Lens: To get more strategic outputs (e.g., coaching scripts, devil’s advocate views on leadership narratives, or org change plans), build that lens into the role definition, for example by framing the role as a leadership coach and strategic advisor.

- Tone & Comms Objective: What communication tone should the AI use? Clear, direct, and data-informed? Should it focus on comms strategies that effectively influence leaders?

Why it works: When AI models were trained on internet data, they learned to associate certain types of content with specific roles (think author bios at the top of articles). By assigning a role, you're helping the AI narrow down the probability space and generate responses that match the style and expertise level of that role. Going back to the storytelling association: this helps it maintain character. Metaphors and comparisons in Gen AI are useful associations to get a deeper view into your context.

HR Examples:

1. General Strategic HRBP in Tech Scale-Up

Prompt: You are a seasoned HR Business Partner with 10 years of experience in technology companies, currently working in a fast-scaling SaaS organization of 500–1000 employees. You partner directly with engineering and product leaders to drive employee engagement, performance management, and organizational development. Your lens is both strategic and advisory, serving as a leadership coach and organizational change partner. Communication should be clear, data-informed, and persuasive, with the objective of influencing leaders to make people-centric, business-savvy decisions.

2. Enterprise-Focused HRBP

Prompt: You are an experienced HR Business Partner with a decade of experience in large, global technology enterprises (10,000+ employees). You specialize in navigating matrixed organizations, managing executive stakeholders, and shaping talent strategies at scale. Your role requires balancing employee engagement with enterprise-level performance frameworks, and you frequently act as a thought partner and devil’s advocate for senior leaders. Your communication style should be direct, concise, and grounded in analytics, ensuring credibility with executives.

3. Start-up/Scale-Up HRBP Coach

Prompt: You are an HR Business Partner with 10 years of experience in technology start-ups and scale-ups. You partner closely with founders and first-time people managers, focusing on performance feedback, team development, and creating scalable HR processes. You act as a coach and mentor, helping leaders build confidence in their management style while aligning people strategies with rapid business growth. Your tone should be supportive, practical, and growth-oriented, offering scripts, playbooks, and frameworks leaders can apply immediately.

4. Transformation & Change HRBP

Prompt: You are a senior HR Business Partner with 10 years of experience supporting technology companies undergoing major organizational change (e.g., restructuring, M&A, or new operating models). Your specialty is in performance enablement, employee engagement, and change management, where you guide leaders through narrative-building, resistance management, and communication planning. Your role is that of a strategic advisor and coach, often challenging leadership assumptions to ensure sustainable change. Communication should be empathetic yet firm, balancing transparency with business alignment.
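The role prompts above share a structure: a role, then business context, a leadership lens, and a tone. As a rough sketch, that structure could be captured in a small helper so each dimension is filled in deliberately. The function name `build_role_prompt` and its parameters are hypothetical, chosen only for illustration.

```python
def build_role_prompt(title: str, experience: str, business_context: str,
                      lens: str, tone: str) -> str:
    """Assemble a role assignment from the dimensions described above."""
    return (
        f"You are {title} with {experience}, "
        f"currently working in {business_context}. "
        f"Your lens is {lens}. "
        f"Communication should be {tone}."
    )


role_prompt = build_role_prompt(
    title="a seasoned HR Business Partner",
    experience="10 years of experience in technology companies",
    business_context="a fast-scaling SaaS organization of 500 to 1000 employees",
    lens="both strategic and advisory, serving as a leadership coach",
    tone="clear, data-informed, and persuasive",
)
print(role_prompt)
```

Keeping the dimensions as separate arguments makes it obvious when one of them, such as tone, has been left out.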

Embrace Specificity and Jargon

What it is: Using precise, specific terminology rather than general language, including industry jargon when appropriate.

Why it works: This may sound obvious, but specific terms have more precise probability associations in the AI's training data. The more specific your language, the more likely the AI will generate output that matches your exact needs. Remember that AI is an enhancer driven by your input. It will go as technical or as general as you let it, because its training data covers both. So the more specific your terminology, the more expert the output: the AI is steered away from high-level, generic material and towards focused, technical material when that is what you need.

HR Example:

- Instead of: "Help with employee feedback"

- Use: "Create a 360-degree feedback template for mid-level software engineers including peer evaluation criteria, manager assessment sections, and self-reflection prompts focused on technical leadership competencies"

Few-Shot Prompting: Show, Don't Just Tell

What it is: Providing the AI with a few examples of the desired input-output format.

Why it works: Examples give the AI concrete patterns to follow, dramatically improving accuracy and consistency. Sander Schulhoff reports that few-shot prompting can improve performance from 0% to 90% in some cases. Your examples can also include the thought process that leads from input to the desired output. The more of that context you give, the more you guide the AI along the journey towards the output, which is helpful because it is easier to control how it gets there.

Examples can also be references of what you do and do not want the output to be.

HR Example: Generating Interview Questions

I need behavioral interview questions for a Marketing Manager role. Here are examples of the style I want:

- Example 1: (Leadership) → 'Tell me about a time you led a cross-functional team to launch a successful marketing campaign. What was your approach, and what were the results?'

- Example 2: (Data-Driven Decision Making) → 'Describe a situation where you used data to make a significant change to a marketing strategy. What was the data, and what was the impact?'

I came up with these examples after noticing that questions which encourage the applicant to explain more of the process and not just the result give us a broader view into the applicant’s work structure.

Now generate three new questions for: Creativity, Budget Management, and Stakeholder Communication.
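The interview-question prompt above follows a repeatable pattern: a request, a few labelled examples, a note on why the examples work, and the new targets. A minimal sketch of assembling that pattern is shown here. The helper `build_few_shot_prompt` and its exact output layout are assumptions for illustration, and the result is only text that you would paste or send to whichever AI tool you use.

```python
def build_few_shot_prompt(request: str, examples: list, note: str,
                          targets: list) -> str:
    """Combine a request, labelled examples, and new targets into one prompt."""
    lines = [request, ""]
    for number, (competency, question) in enumerate(examples, start=1):
        lines.append(f"- Example {number}: ({competency}) -> '{question}'")
    lines += ["", note, ""]
    lines.append(f"Now generate {len(targets)} new questions, one for each of:")
    lines += [f"- {target}" for target in targets]
    return "\n".join(lines)


few_shot_prompt = build_few_shot_prompt(
    request=("I need behavioral interview questions for a Marketing Manager "
             "role. Here are examples of the style I want:"),
    examples=[
        ("Leadership",
         "Tell me about a time you led a cross-functional team to launch a "
         "successful marketing campaign. What was your approach, and what "
         "were the results?"),
        ("Data-Driven Decision Making",
         "Describe a situation where you used data to make a significant "
         "change to a marketing strategy. What was the data, and what was "
         "the impact?"),
    ],
    note=("These examples ask the applicant to explain the process, "
          "not just the result."),
    targets=["Creativity", "Budget Management", "Stakeholder Communication"],
)
print(few_shot_prompt)
```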

Chain-of-Thought (CoT) and Decomposition

What it is: Instructing the AI to break down complex problems into steps and show its reasoning process.

Why it works: This forces the AI to apply more computational effort and follow a logical sequence, reducing the chance of errors or oversimplified responses.

Pro tip when iterating: Phrases like “walk me through”, “explain to me why...”, or “give me a step by step breakdown” prompt the AI to act as a knowledgeable source explaining its decisions. Sometimes it will catch its own mistake at this point, because asking for a breakdown makes it re-examine its earlier answer.

HR Example: Analyzing Employee Survey Data

Analyze our employee engagement survey data following these steps:

- Identify the top 3 highest and lowest scoring categories

- Analyze the open-ended comments for each category to understand the 'why' behind the scores

- Suggest 3 concrete, actionable recommendations for the next quarter

Think through each step and show your reasoning.
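The survey prompt above is a task, an ordered list of steps, and a closing instruction to show reasoning. The sketch below builds that shape from a plain list of steps, numbering them so the order is explicit. The helper name `build_stepwise_prompt` is hypothetical.

```python
def build_stepwise_prompt(task: str, steps: list) -> str:
    """Number the steps and ask the AI to reason through each in order."""
    numbered = "\n".join(
        f"{number}. {step}" for number, step in enumerate(steps, start=1)
    )
    closing = "Think through each step and show your reasoning."
    return f"{task}\n\n{numbered}\n\n{closing}"


cot_prompt = build_stepwise_prompt(
    task="Analyze our employee engagement survey data following these steps:",
    steps=[
        "Identify the top 3 highest and lowest scoring categories",
        "Analyze the open-ended comments for each category to understand "
        "the 'why' behind the scores",
        "Suggest 3 concrete, actionable recommendations for the next quarter",
    ],
)
print(cot_prompt)
```

Numbering the steps is a design choice rather than a requirement: it makes it easier to refer back to a single step when you iterate ("redo step 2 with more detail").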

Context Stacking: Building Comprehensive Understanding

What it is: Layering multiple pieces of relevant context to give the AI a complete picture.

Why it works: More relevant context leads to more nuanced and accurate responses. Structured context prevents the AI from making incorrect assumptions. In the storytelling analogy, this step is crafting the plot: you have already defined the character's role and given it some personality, and now you are placing it in a fuller context. You need to define the setting (the environment), the other characters (any external factors it may come into contact with), and any additional details needed to fully understand the situation. Ask yourself what additional context would help answer the following questions:

- Who?

- What?

- When?

- Where?

- How?

- For whom?

- For what?

- Why?

HR Example: Policy Creation

You are an HR policy advisor. Draft a 'Work from Anywhere' policy with this context:

- Company Profile: 500-person technology company valuing flexibility and autonomy

- Policy Goal: Attract top talent while ensuring productivity and team cohesion

- Constraints: Core collaboration hours 10am-3pm local time; US residence required

- Culture: Highly collaborative, needs virtual team-building and in-person meetups

Create a comprehensive policy incorporating all these elements.
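The policy prompt above stacks four labelled layers of context between an opening role and a closing instruction. As a hedged sketch, the same stacking can be done with a dictionary of layers plus a check that no expected layer was forgotten. The names `stack_context` and `REQUIRED_LAYERS` are assumptions for illustration; which layers you require is up to your team.

```python
# Hypothetical checklist of layers this team always wants in a policy prompt.
REQUIRED_LAYERS = ["Company Profile", "Policy Goal", "Constraints", "Culture"]


def stack_context(opening: str, layers: dict, closing: str,
                  required: list = REQUIRED_LAYERS) -> str:
    """Layer labelled context between an opening and a closing instruction."""
    missing = [name for name in required if not layers.get(name)]
    if missing:
        raise ValueError(f"Missing context layers: {', '.join(missing)}")
    lines = [opening, ""]
    lines += [f"- {label}: {detail}" for label, detail in layers.items()]
    lines += ["", closing]
    return "\n".join(lines)


policy_prompt = stack_context(
    opening=("You are an HR policy advisor. Draft a 'Work from Anywhere' "
             "policy with this context:"),
    layers={
        "Company Profile": ("500-person technology company valuing "
                            "flexibility and autonomy"),
        "Policy Goal": ("Attract top talent while ensuring productivity "
                        "and team cohesion"),
        "Constraints": ("Core collaboration hours 10am-3pm local time; "
                        "US residence required"),
        "Culture": ("Highly collaborative, needs virtual team-building "
                    "and in-person meetups"),
    },
    closing="Create a comprehensive policy incorporating all these elements.",
)
print(policy_prompt)
```

The check is there because a forgotten layer, such as constraints, is exactly where the AI would otherwise fill the gap with its own assumptions.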

Knowledge Blocks: Creating Reusable Context Libraries

What it is: Pre-written, standardized blocks of context information that you can quickly insert into prompts. Think of them as "context templates" that contain consistent information about your company, processes, or standards.

Why it works: Knowledge blocks dramatically reduce the time needed to write comprehensive prompts while ensuring consistency across all your AI interactions. Instead of rewriting the same context information repeatedly, you maintain a library of reusable blocks that provide rich, structured context every time.

The Time-Saving Power: Rather than spending 5-10 minutes crafting context for each prompt, you can insert pre-written knowledge blocks in seconds, allowing you to focus on the specific task rather than recreating background information.

Pro tip: If you find yourself reusing the same context across multiple prompts, consider saving it as a knowledge block.

- Context stacking is usually more detailed and specific to the task at hand.

- Knowledge blocks are reusable pieces of context for consistency.

The two often overlap. In fact, some knowledge blocks may also be included when you’re stacking context for a particular task.

HR Example: Building Your Knowledge Block Library

Company Context Block:

Company Profile: TechCorp is a 500-employee SaaS company in the HR technology space. We value transparency, innovation, and work-life balance. Our culture emphasizes data-driven decisions, continuous learning, and collaborative problem-solving. We operate in a hybrid work environment with offices in Toronto, Montreal, and Vancouver.

Performance Review Standards Block:

Performance Review Standards: Our performance reviews follow a competency-based model focusing on: Technical Skills (40%), Leadership & Collaboration (30%), Innovation & Problem-Solving (20%), and Cultural Alignment (10%). Reviews are conducted quarterly with a focus on growth and development rather than punitive measures.

Compliance Requirements Block:

Compliance Context: All HR communications must comply with Canadian employment standards, respect privacy laws (PIPEDA), ensure accessibility (AODA), and maintain our commitment to diversity, equity, and inclusion. Any policy or communication should be reviewed for potential bias and legal compliance.

Using Knowledge Blocks in Practice:

You are an experienced HR Business Partner. [INSERT: Company Context Block] [INSERT: Performance Review Standards Block]

Task: Create a performance improvement plan template for an underperforming software developer. The template should be supportive, growth-focused, and include specific milestones and support resources.
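The `[INSERT: ...]` placeholders above can be filled automatically from a shared library. The sketch below is one possible way to do that, assuming blocks are kept in a simple dictionary keyed by name. The names `KNOWLEDGE_BLOCKS` and `fill_blocks` are hypothetical, and the block texts are shortened from the examples above.

```python
import re

# Hypothetical library of reusable blocks, keyed by the name used in prompts.
KNOWLEDGE_BLOCKS = {
    "Company Context Block": (
        "Company Profile: TechCorp is a 500-employee SaaS company in the HR "
        "technology space. We value transparency, innovation, and work-life "
        "balance."
    ),
    "Performance Review Standards Block": (
        "Performance Review Standards: Our performance reviews follow a "
        "competency-based model and are conducted quarterly with a focus on "
        "growth and development."
    ),
}

# Matches placeholders of the form [INSERT: Block Name].
PLACEHOLDER = re.compile(r"\[INSERT: ([^\]]+)\]")


def fill_blocks(template: str, blocks: dict) -> str:
    """Replace each [INSERT: name] placeholder with the stored block text."""
    def replace(match):
        name = match.group(1)
        if name not in blocks:
            # Fail loudly so a typo never sends a half-filled prompt.
            raise KeyError(f"Unknown knowledge block: {name}")
        return blocks[name]
    return PLACEHOLDER.sub(replace, template)


template = (
    "You are an experienced HR Business Partner. "
    "[INSERT: Company Context Block] "
    "[INSERT: Performance Review Standards Block]\n\n"
    "Task: Create a performance improvement plan template for an "
    "underperforming software developer."
)
prompt = fill_blocks(template, KNOWLEDGE_BLOCKS)
print(prompt)
```

Keeping the blocks in one place means an update, such as a new office location, reaches every prompt that uses the block.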

Building Your Knowledge Block System:

- Identify Recurring Context: Note what information you repeatedly provide in prompts

- Standardize the Language: Create consistent, well-structured blocks

- Organize by Category: Group blocks by function (company info, processes, compliance, etc.)

- Keep Them Updated: Regularly review and update blocks as your organization evolves

- Test for Effectiveness: Ensure each block actually improves AI output quality

Bonus: Advanced Prompting Frameworks

The TCRTE Framework

- T - Task: What do you want the AI to do?

- C - Context: What background information does the AI need?

- R - References: Are there examples or style guides to follow?

- T - Testing: How will you measure success?

- E - Enhancement: How will you iterate and improve?

The SHRM Four-Step Framework

- S - Specify: Clearly define your goal and provide context

- H - Hypothesize: Anticipate possible outputs and constraints

- R - Refine: Iterate based on actual outputs

- M - Measure: Evaluate against your success metrics

Common Pitfalls and Myths

- The Myth of the 'Magic' Role Prompt: While role assignment is important for style and approach, simply saying "You are a world-class expert" doesn't magically improve correctness. Focus on providing clear instructions and quality context.

- Ignoring the Iterative Process: Effective prompting is a conversation. Your first prompt is rarely your best. Analyze outputs, identify gaps, and refine.

- Mixing Tasks in One Chat: Keep conversations focused on single tasks to maintain clean context and prevent probability confusion.

Implementation Roadmap

Week 1: Foundation Building

- Day 1-2: Implement "One Chat, One Task" rule

- Day 3-4: Practice role assignment in all prompts

- Day 5: Start using specific terminology and jargon

Week 2: Power Techniques

- Day 1-3: Master few-shot prompting with 2-3 examples

- Day 4-5: Create your first 3 knowledge blocks

Week 3: Advanced Implementation

- Day 1-2: Apply chain-of-thought to complex analysis tasks

- Day 3-4: Practice systematic context stacking

- Day 5: Combine multiple techniques for comprehensive prompts

Week 4: Optimization and Scaling

- Day 1-2: Build comprehensive knowledge block library

- Day 3-4: Create team templates and best practices

- Day 5: Measure and document improvements to your process

Measuring Success

Nice to have: Track these metrics to quantify your team's improvement.

- Time to first usable draft: Aim to reduce the time it takes to get a draft you can actually work with by 60–80%.

- Number of revisions needed: Try to go from needing 3–4 rounds of rewriting down to just 1–2.

- Consistency across team members: Check that outputs feel aligned in style, tone, and accuracy, no matter who is prompting.

- Confidence in using AI for sensitive tasks: Run a short team survey: are people feeling more comfortable using AI for things like policy drafts or performance review support?

- Quality of outputs (measured by iterations): Since AI rarely gives you the perfect answer on the first try, count how many iterations it takes to reach something usable.

  - What’s an iteration? Each time you adjust your prompt and the AI gives you a new answer, that’s one iteration.

  - Example:

    - Iteration 1: “Draft a job description for a software engineer.”

    - Iteration 2: “Make it for mid-level engineers with 3–5 years’ experience.”

    - Iteration 3: “Add technical skills and collaboration competencies.”

    - → By Iteration 3, you have something ready to use.

  - Target: Over time, aim to reach a usable output in fewer iterations and with less heavy editing. You get there by making your initial prompt more complete from the start.
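If your team logs a few numbers per task, the time and iteration metrics above reduce to simple arithmetic. The sketch below assumes a hypothetical log with `minutes_to_draft` and `iterations` per task, and the figures are made-up sample values, not results from any study.

```python
from statistics import mean


def percent_reduction(before: float, after: float) -> float:
    """Percentage drop from a baseline value to a current value."""
    return (before - after) * 100 / before


# Hypothetical sample logs: one entry per finished task.
baseline_log = [
    {"minutes_to_draft": 50, "iterations": 4},
    {"minutes_to_draft": 40, "iterations": 3},
]
current_log = [
    {"minutes_to_draft": 12, "iterations": 2},
    {"minutes_to_draft": 15, "iterations": 1},
]

baseline_minutes = mean(entry["minutes_to_draft"] for entry in baseline_log)
current_minutes = mean(entry["minutes_to_draft"] for entry in current_log)
time_saved_pct = percent_reduction(baseline_minutes, current_minutes)

baseline_iterations = mean(entry["iterations"] for entry in baseline_log)
current_iterations = mean(entry["iterations"] for entry in current_log)

print(f"Time to first usable draft reduced by {time_saved_pct:.0f}%")
print(f"Average iterations: {baseline_iterations} -> {current_iterations}")
```

Averages over a handful of tasks are noisy, so compare like with like (for example, job descriptions against job descriptions) before drawing conclusions.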

Team Training Resource

Quick Reference Card: Create a one-page summary of the 6 techniques for easy reference

Template Library: Build shared knowledge blocks and successful prompt templates

Regular Check-ins: Weekly 15-minute sessions to share successes and challenges

Peer Learning: Pair experienced and new users for knowledge transfer

Glossary of terms

- Generative AI: A type of artificial intelligence that produces new content—text, images, or audio—by recombining statistical patterns in training data. It doesn’t actually understand the meaning of what it creates.

- Large Language Model (LLM): A machine learning model trained on massive datasets, often scraped from the internet. It generates text by predicting likely word sequences, but its outputs can reflect the biases present in its training data.

- Probability Engine: An AI system that predicts outputs based on probability rather than reasoning or understanding. Its “knowledge” comes from the statistical frequency of patterns in data.

- Training Data: The text, images, and media used to train AI models. These sources are often opaque and can encode histories of bias, inequality, and exclusion.

- Bias: Distortions or unfair patterns in AI outputs caused by imbalances in training data or model design. Bias reflects societal inequities embedded in data—not inherent traits of any group.

- Hallucination: When an AI confidently generates false or fabricated information. Hallucinations happen because the system predicts what sounds right, not what’s true.

- Iteration: The process of refining AI outputs step by step through adjusted prompts. Iteration reminds us AI is a human-guided process, not a one-and-done solution.

- Human-in-the-Loop: A principle ensuring people, not machines, remain accountable for decisions. Humans review, contextualize, and override AI outputs when needed.

- Harmless, Helpful, Truthful: A framework guiding AI design: avoid harm, provide value, and aim for accuracy. How “harmlessness” is defined depends on who designs the system.

- Prompt: The instruction given to AI. Prompts frame the system’s worldview and strongly influence the inclusivity and accuracy of the output.

- Knowledge Blocks: Reusable, standardized context snippets—like company policies—inserted into prompts. If these blocks aren’t inclusive, AI will reproduce their blind spots.

- Context: Background information that shapes how AI generates outputs. Because context encodes assumptions and values, it can either reinforce exclusion or broaden representation.

- Context Stacking: Adding multiple layers of context to guide AI outputs more effectively—like emphasizing diversity, equity, and global relevance.

- Role Assignment: Asking AI to take on a persona (e.g., recruiter or HR advisor). The model doesn’t gain expertise; it mimics patterns tied to that role.

- Few-Shot Prompting: Providing a few examples to guide AI’s tone or structure. These examples can unintentionally transfer the assumptions they contain.

- Chain-of-Thought (CoT): Prompting the AI to reason step by step. The “reasoning” is statistical, not logical—it mirrors data patterns rather than actual analysis.

- Frameworks (TCRTE, SHRM): Structured methods for disciplined prompting—defining tasks, testing outputs, refining, and measuring results to ensure ethical, practical use.

Want to go deeper? Download the full AI glossary for HR teams to see real-world examples

Research Sources and References

This guide is based on comprehensive research from leading AI organizations and HR practitioners:

Primary Sources

[1] Anthropic Research Team (September 29, 2025). Effective context engineering for AI agents. Anthropic Engineering Blog.

URL: https://www.anthropic.com/engineering/effective-context-engineering-for-ai-agents

Key Contribution: Context engineering principles, attention budget concepts, system prompt optimization

[2] Schulhoff, Sander (June 19, 2025). AI prompt engineering in 2025: What works and what doesn't. Lenny's Newsletter.

URL: https://www.lennysnewsletter.com/p/ai-prompt-engineering-in-2025-sander-schulhoff

Key Contribution: Few-shot prompting effectiveness (0% to 90% improvement), role prompting myths, decomposition techniques

[3] Gita, Fonyuy (September 25, 2025). The Complete Guide to Prompt Engineering in 2025: Master the Art of AI Communication. DEV Community.

URL: https://dev.to/fonyuygita/the-complete-guide-to-prompt-engineering-in-2025-master-the-art-of-ai-communication-4n30

Key Contribution: TCRTE framework, sandwich method, constraint-based creativity, multimodal prompting

[4] Society for Human Resource Management (SHRM) (2024). The AI Prompts Guide for HR (with Templates!). SHRM Toolkit.

URL: https://www.shrm.org/topics-tools/tools/toolkits/complete-ai-prompting-guide-hr

Key Contribution: SHRM 4-step framework, HR-specific applications, compliance considerations

[5] SHRM Labs (February 16, 2024). Prompt Engineering for HR: How to Get The Most Out of Generative AI Tools. WorkplaceTech Pulse Newsletter.

URL: https://www.shrm.org/labs/resources/prompt-engineering-for-hr

Key Contribution: Problem formulation concepts, iterative refinement, HR use case examples

Supporting Research

[6] Team-GPT (March 17, 2025). 21 ChatGPT Prompts For Human Resources That Work. Team-GPT Blog.

URL: https://team-gpt.com/blog/chatgpt-prompts-for-human-resources

[7] Goco (November 6, 2024). 25 ChatGPT Prompts for HR to Use in 2025. Goco HR Blog.

URL: https://www.goco.io/blog/25-chatgpt-prompts-for-hr

[8] AI for HR (AIHR) (March 12, 2025). AI in HR: A Comprehensive Guide. AIHR Academy.

URL: https://www.aihr.com/blog/ai-in-hr/

Academic and Technical Sources

[9] Vatsal, S. et al. (2024). A Survey of Prompt Engineering Methods in Large Language Models. arXiv preprint arXiv:2407.12994.

Key Contribution: Systematic analysis of prompting techniques across NLP tasks

[10] Penn, Christopher (2025). Almost Timeless: 48 Foundation Principles of Generative AI. Trust Insights.

Key Contribution: Probability engine concept, one chat per task principle, AI imperatives hierarchy

Industry Reports and Whitepapers

[11] Lakera AI (May 30, 2025). The Ultimate Guide to Prompt Engineering in 2025. Lakera Security Blog.

URL: https://www.lakera.ai/blog/prompt-engineering-guide

[12] K2View (2025). Prompt engineering techniques: Top 5 for 2025. K2View Technology Blog.

URL: https://www.k2view.com/blog/prompt-engineering-techniques/

Methodology Note

This guide synthesizes findings from 15+ primary sources, focusing on techniques with empirical validation or widespread adoption by leading AI practitioners. All recommendations are based on documented research or case studies from organizations successfully implementing AI in HR contexts.

Research Period: January 2024 - September 2025

Focus Areas: Prompt engineering effectiveness, HR-specific applications, practical implementation

Validation: Cross-referenced across multiple independent sources and tested with real HR use cases
